Building and deploying Large Language Model (LLM) applications for production environments in 2024 involves selecting the right technologies, frameworks, and tools. Based on our experience working on various LLM-based projects—from Retrieval-Augmented Generation (RAG) to agent-driven systems—this guide walks through the entire lifecycle of an LLM application. We cover essential technologies, tools, and emerging trends, enabling you to build, deploy, and scale robust LLM-powered solutions with ease.

LLM API and Self-Hosting Solutions

When selecting an LLM provider, privacy, ease of use, and feature sets are critical considerations. There are two primary options:

Self-hosted LLM: If you want to go the self-hosted route, Ollama is the best choice. You might ask, “Why bother with a self-hosted LLM? Isn’t it more expensive, harder to set up, and produces lower-quality responses?” The answer is simple—privacy.

OpenRouter: If privacy is not a concern, OpenRouter offers a user-friendly LLM aggregator. It supports multiple models through a single interface, allowing seamless switching with features like automatic logging, model comparisons, and free trials. Other LLM providers such as together.ai and Mistral are available, but OpenRouter remains a top choice due to its ease of use and rich feature set.

Data Handling: Preprocessing, Integration, and ETL

Preprocessing and managing data effectively is crucial for ensuring the smooth operation of LLM applications. This involves data connectors, ETL (extract, transform and load) tools, and data processing frameworks for both structured and unstructured data:

- Open-source data connectors & ETL tools:

- Apache NiFi: Automates data flows from various sources.

- Airbyte: Extracts, loads, and transforms data from APIs and databases.

- Closed-source ETL options:

- Fivetran and Stitch: Simplify data pipeline creation through managed services.

- Data processing frameworks:

- Open-source: Apache Spark for large-scale processing and Pandas for smaller datasets.

- Closed-source: Databricks, built on Apache Spark, offers advanced data processing capabilities.

For structured data extraction from unstructured formats, options like Unstructured.io, Llamaparse by Llamaindex, and GPT-4 by OpenAI are excellent choices.

Data Storage and Management

Selecting the appropriate data storage system is essential for handling structured and unstructured data:

- Structured data storage:

- Open-source: PostgreSQL and MySQL for reliable relational databases.

- Closed-source: Amazon RDS for managed PostgreSQL/MySQL solutions.

- Unstructured data storage:

- Open-source: Elasticsearch for indexing/searching unstructured data and MongoDB for handling JSON-like data.

- Closed-source: Amazon OpenSearch and Azure AI Search (formerly Cognitive Search).

RAG Databases

RAG systems are crucial for improving LLM accuracy by addressing challenges like outdated information, hallucinations, and biased responses. The top RAG database solutions are:

- ChromaDB: Ideal for rapid prototyping and proofs of concept, it’s easy to set up and well-documented.

- Supabase: Built on PostgreSQL with the PGVector plugin, it is a robust choice for larger projects requiring scalability and advanced features.

- Milvus: Efficient for vector storage with Milvus Lite for development and Zilliz for production environments.

Embedding Models

Embedding models enhance the retrieval and precision of LLMs. Top options include:

- Cohere Embed v3: Known for superior retrieval recall and precision, especially for financial datasets.

- OpenAI Text Embedding-3-Large: Offers better performance than the previous Ada 002 model and is easier to deploy.

LLM Agents and Frameworks

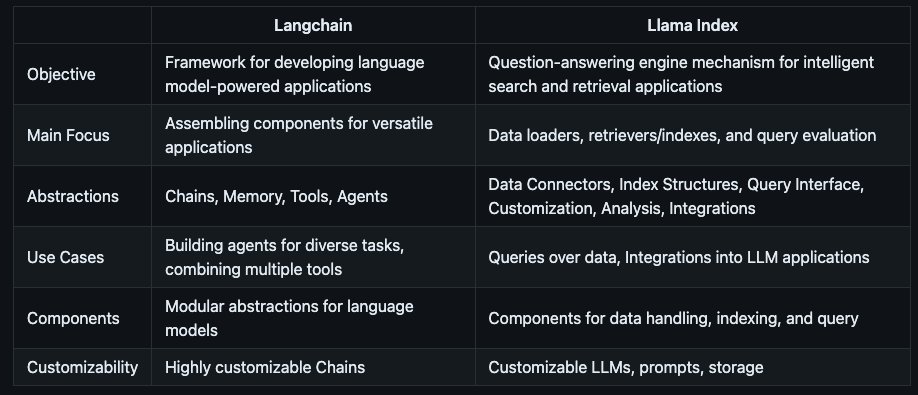

LLM agents allow for sequential reasoning and problem-solving in complex workflows. Key frameworks include:

- LangChain: Popular for its large community and extensive resources. It’s a great starting point for newcomers but can be complex to extend for intricate projects.

- LlamaIndex: Optimized for indexing and retrieval, it’s ideal for RAG pipelines, though not as versatile as LangChain for complex projects.

- Haystack 2.0: A well-structured alternative to LangChain, known for its clarity and responsiveness.

Observability

Monitoring your LLM applications ensures performance, accuracy, and reliability. For this, we recommend:

- LangSmith: User-friendly for tracking inputs and outputs, it’s best suited for smaller projects and prototypes.

- Arize Phoenix: Offers a comprehensive free plan with more extensive dataset tracking but may be less intuitive than LangSmith.

Backend Development and Deployment

For backend development, Python with FastAPI and Pydantic is highly recommended for its performance, type safety, and ease of use. Here’s why:

- FastAPI: Modern and fast, with great documentation and support for essential features like authentication, CORS, and multi-threading.

- Pydantic: Enforces strict typing, ensuring reliability in application endpoints.

Gunicorn and Uvicorn are commonly used worker managers for handling concurrent requests efficiently.

For deployment, Docker is favored for its consistency, scalability, and ease of use. Docker Compose allows for straightforward management of dependencies like databases and proxies. To streamline the deployment process further, Coolify is an excellent choice, offering:

- Automated SSL certificate management

- Easy reverse proxy configuration

- Database backup automation

- CI/CD pipelines integrated with GitHub

- Monitoring and notification tools

Additional Tools

- Authentication: Stytch is a robust option for B2B applications, offering features like OAuth and Magic Links.

- Error Monitoring: Sentry provides effective error tracking and alerts, ensuring application reliability.

- Feature Flags: HappyDevKit enables controlled rollouts and A/B testing for new AI features.

Conclusion

Building a production-ready LLM application in 2024 requires strategic technology choices tailored to individual project needs. This toolkit, which covers everything from LLM APIs and RAG databases to observability and deployment platforms, offers a comprehensive roadmap for creating scalable, reliable, and maintainable LLM applications that are easy to deploy and manage.