In this blog, we will discuss the often-overlooked yet essential role of benchmarking in large language model (LLM) development. A benchmark, or evaluation set, serves as a pre-defined standard to assess an LLM’s performance and is a critical first step in any product development process. I’ll explain why benchmarks help product managers define success criteria, assist engineers in selecting the best open-source models, and reveal performance gaps for improvement. We also explore who should ideally create these benchmarks and how they can guide teams to refine models, bridging gaps in essential abilities like humor detection. Let’s dive into why benchmarking is one of the most critical and insightful tasks in building effective LLM products.

For detailed information, please watch the YouTube video: Why Benchmark is Crucial in LLM Development: Simple Explained

What we call a benchmark, also known as an evaluation set, is a baseline we design in advance to assess the capabilities of an LLM. Constructing a benchmark should be the very first step in developing any LLM product, but it’s often the most easily overlooked step.

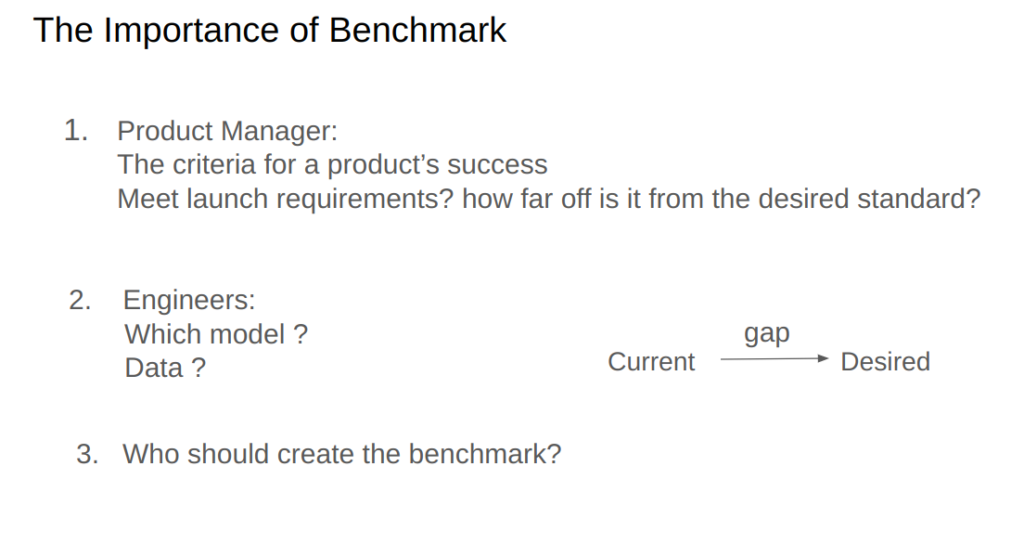

For instance, consider a product manager. For them, it’s essential to clearly define the criteria for a product’s success, as well as to know the performance level the product must reach before launch. If it doesn’t meet launch requirements, how far off is it from the desired standard? To answer these questions, a benchmark is indispensable.

Secondly, for engineers, there are other considerations, such as deciding which open-source model to choose and what kind of data to collect. With a benchmark, these questions become easier to address. Given a benchmark, we can quickly test some of the open-source models available on the market. After testing, we can easily identify which open-source model performs best according to our benchmark. We can then choose one or two of the top-performing ones.

Throughout the benchmarking tests, we can also easily observe the gap between the current model and our ideal model—essentially, the difference between them. This gap represents the area we need to improve through fine-tuning. For example, if part of our benchmark includes data to test a model’s sense of humor, we may find that the current open-source model does not perform well in this area. But ideally, we want the model to have a sense of humor, so a gap exists here. Next, our goal becomes clear: we need to gather more data related to humor. Similarly, if we find a lack of other abilities, we should correspondingly gather datasets to enhance those capabilities.

Now, let’s discuss the third question—who should create the benchmark? Ideally, I believe the responsibility should lie with the product manager, product lead, or project manager. If they are not very familiar with the capabilities of large models, they may need some support from the engineering team. In summary, constructing a benchmark should be the first step for all large-model products. It is also the most important and, at the same time, a task that requires significant insight.