In this post, we’ll dig into the true costs of fine-tuning large language models (LLMs) and explain why GPU resources might not be the most crucial factor. From GPU expenses and labor costs to inference and data collection, I’ll walk you through each element and highlight which factors matter most for success. By the end, you’ll have a clear picture of how to approach fine-tuning more effectively, balancing technical resources with business needs. Don’t forget to share your thoughts in the comments!

For detailed information, please watch the YouTube video: Understanding the Costs of Fine-Tuning LLMs: A Practical Guide

When it comes to fine-tuning models, many believe GPU resources are the most critical factor. I beg to differ. Let me explain why, and by the end I’ll highlight what we truly need to focus on.

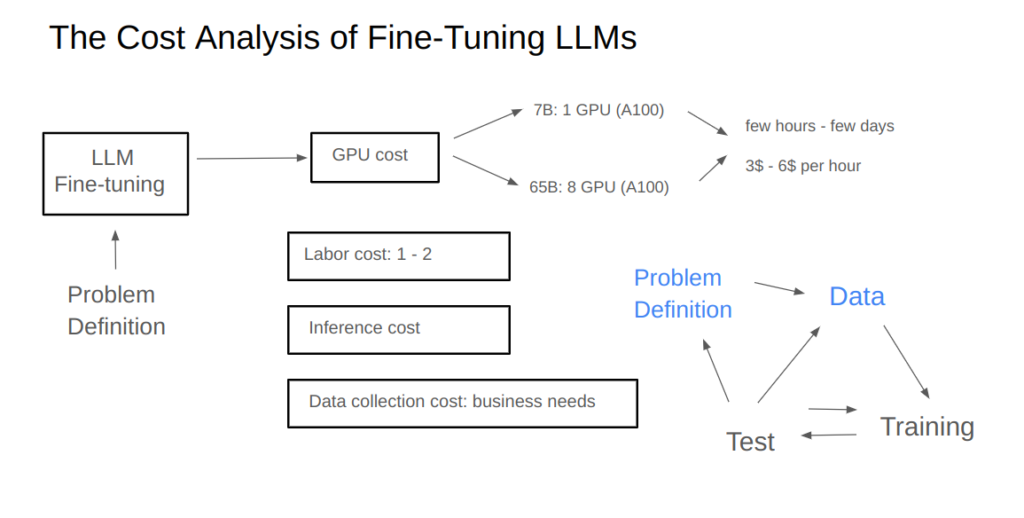

Cost Analysis of Fine-Tuning Models

Fine-tuning costs can be broken down into four key components, assuming we already have a clear problem to solve:

1. GPU Costs

Calculating GPU costs is straightforward. For instance, a 7B-parameter LLaMA model can usually be fine-tuned on a single GPU when using a parameter-efficient method such as LoRA (Low-Rank Adaptation). Larger models, such as the 65B-parameter LLaMA, may need around eight GPUs.
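A quick parameter count shows why LoRA lets a 7B model fit on one GPU. LoRA keeps a d × k weight matrix frozen and trains two low-rank factors of shapes d × r and r × k, so each adapted matrix adds r(d + k) trainable parameters. The sketch below assumes LLaMA-7B's shape (32 layers, hidden size 4096) and an illustrative configuration of rank r = 8 on the query and value projections only; other ranks or target modules change the count.

```python
def lora_trainable_params(d: int, k: int, rank: int) -> int:
    """Parameters LoRA adds to one frozen d x k weight: A (d x r) plus B (r x k)."""
    return rank * (d + k)


def count_lora_params(num_layers: int, hidden: int, rank: int, matrices_per_layer: int) -> int:
    """Total LoRA parameters when every adapted matrix is hidden x hidden.

    Square matrices hold for LLaMA-7B's query and value projections.
    """
    per_matrix = lora_trainable_params(hidden, hidden, rank)
    return num_layers * matrices_per_layer * per_matrix


if __name__ == "__main__":
    # Assumed configuration: LLaMA-7B shape, rank 8, query and value projections adapted.
    trainable = count_lora_params(num_layers=32, hidden=4096, rank=8, matrices_per_layer=2)
    base_params = 6.74e9  # approximate LLaMA-7B parameter count
    print(f"trainable: {trainable:,} ({trainable / base_params:.3%} of the base model)")
```

Under these assumptions the adapters hold 4,194,304 trainable parameters, well under 0.1% of the roughly 6.7B frozen weights, so optimizer state and weight gradients are kept only for that small fraction of the model.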

- Fine-tuning time: Ranges from hours to days.

- GPU rental rates: At the time of writing, renting an A100 GPU costs roughly $3–$6 per hour.

- Total cost: Can range from tens to thousands of dollars, depending on the model size and fine-tuning duration.
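The total is simply GPUs × hours × hourly rate. The minimal sketch below uses the $3–$6 A100 range above; the run lengths (10 hours on one GPU for a 7B LoRA run, 48 hours on eight GPUs for a 65B model) are assumptions for illustration, not measurements.

```python
def gpu_cost_range(num_gpus: int, hours: float,
                   low_rate: float = 3.0, high_rate: float = 6.0) -> tuple[float, float]:
    """Return (low, high) rental cost in dollars: GPUs x hours x hourly rate."""
    gpu_hours = num_gpus * hours
    return gpu_hours * low_rate, gpu_hours * high_rate


if __name__ == "__main__":
    # Hypothetical run lengths; real durations depend on data size, epochs, and method.
    scenarios = {
        "7B + LoRA, 1 GPU, 10 h": (1, 10),
        "65B, 8 GPUs, 48 h": (8, 48),
    }
    for name, (gpus, hours) in scenarios.items():
        low, high = gpu_cost_range(gpus, hours)
        print(f"{name}: ${low:,.0f} to ${high:,.0f}")
```

With those assumed durations, the small run comes to $30 to $60 and the large one to $1,152 to $2,304 (384 GPU-hours), consistent with the tens-to-thousands range.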

Either way, GPU expenses are predictable and generally manageable.

2. Labor Costs

A typical fine-tuning project requires 1–2 engineers, making labor costs relatively predictable.

3. Inference Costs

While inference costs accumulate after deployment, they are usually optimized during the production phase. For now, let’s exclude them and focus on building a successful product.

4. Data Costs

Data collection costs are the most variable and unpredictable:

- If you already have high-quality data, formatting it for fine-tuning is inexpensive.

- If not, you may need to hire labelers or even maintain a web scraping team.
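If the data already exists, formatting is usually a short script. The sketch below assumes a hypothetical list of question/answer records (for example, an FAQ export) and writes them in the chat-style "messages" JSONL layout that many fine-tuning tools accept; check the exact schema your training framework expects.

```python
import json
from typing import Iterable


def to_chat_jsonl(records: Iterable[dict], system_prompt: str) -> str:
    """Convert question/answer records into JSON Lines, one example per line.

    Assumes each record has 'question' and 'answer' keys (hypothetical schema).
    """
    lines = []
    for rec in records:
        example = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": rec["question"].strip()},
                {"role": "assistant", "content": rec["answer"].strip()},
            ]
        }
        # ensure_ascii=False keeps non-English text readable in the output file
        lines.append(json.dumps(example, ensure_ascii=False))
    return "\n".join(lines)


if __name__ == "__main__":
    faq = [
        {"question": "How do I reset my password?", "answer": "Use the 'Forgot password' link."},
        {"question": "Can I change my plan?", "answer": "Yes, from the Billing page."},
    ]
    print(to_chat_jsonl(faq, system_prompt="You are a helpful support assistant."))
```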

In some cases, data costs can exceed all other costs combined, especially when the data must be collected or labeled from scratch.
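To see how data can swamp everything else, the sketch below adds up GPU, labor, and data spend for two scenarios (inference is left out, as above). Every dollar figure, the $1-per-example labeling rate, and the 20% review overhead are hypothetical placeholders chosen only to show the arithmetic; substitute your own quotes.

```python
from dataclasses import dataclass


@dataclass
class FineTuneBudget:
    gpu: float    # GPU rental, dollars
    labor: float  # engineer time, dollars
    data: float   # collection, labeling, and cleaning, dollars

    def total(self) -> float:
        return self.gpu + self.labor + self.data

    def shares(self) -> dict[str, float]:
        """Fraction of the total spent on each component."""
        t = self.total()
        return {"gpu": self.gpu / t, "labor": self.labor / t, "data": self.data / t}


def labeling_cost(num_examples: int, cost_per_example: float, review_overhead: float = 0.2) -> float:
    """Labeling spend plus a fractional overhead for review passes (20% is an assumption)."""
    return num_examples * cost_per_example * (1 + review_overhead)


if __name__ == "__main__":
    # All figures below are hypothetical placeholders, not measured costs.
    existing_data = FineTuneBudget(gpu=1_000, labor=20_000, data=500)
    from_scratch = FineTuneBudget(gpu=1_000, labor=20_000,
                                  data=labeling_cost(30_000, cost_per_example=1.0))
    for name, budget in [("existing data", existing_data), ("labeled from scratch", from_scratch)]:
        s = budget.shares()
        print(f"{name}: total ${budget.total():,.0f}, data share {s['data']:.0%}")
```

With these placeholders, data is about 2% of a $21,500 budget when it already exists, but about 63% of a $57,000 budget when 30,000 examples must be labeled, more than GPU and labor combined.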

The Cost Structure of AI Projects

AI projects typically follow an iterative cycle:

- Data Collection: Gather data and prepare it for fine-tuning.

- Model Training: Train the model on the prepared data.

- Testing and Iteration: Test the model and refine it based on results.

If testing reveals poor performance, we might need to:

- Adjust hyperparameters or methods.

- Revisit data collection to improve quality or volume.

- Reevaluate the problem definition itself.
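The loop above can be expressed as a simple cost model: each fix sends you back to a different stage, and everything downstream of that stage has to be repeated. The stage names and per-stage costs below are hypothetical; the point is the structure, in which upstream fixes cost far more than tuning.

```python
from enum import Enum


class Fix(Enum):
    TUNE_HYPERPARAMETERS = "tune"  # re-run training only
    REVISIT_DATA = "data"          # collect or clean data, then retrain
    REDEFINE_PROBLEM = "problem"   # rescope, recollect data, then retrain


# Hypothetical per-stage costs in dollars; replace with your own estimates.
STAGE_COST = {"define": 5_000, "data": 20_000, "train": 1_000, "test": 500}

# Stages that each fix forces you to repeat.
STAGES_REPEATED = {
    Fix.TUNE_HYPERPARAMETERS: ["train", "test"],
    Fix.REVISIT_DATA: ["data", "train", "test"],
    Fix.REDEFINE_PROBLEM: ["define", "data", "train", "test"],
}


def project_cost(fixes: list[Fix]) -> int:
    """Cost of the first full pass plus every stage repeated by each fix."""
    total = sum(STAGE_COST.values())  # initial define -> data -> train -> test pass
    for fix in fixes:
        total += sum(STAGE_COST[stage] for stage in STAGES_REPEATED[fix])
    return total


if __name__ == "__main__":
    print("3 tuning rounds:", project_cost([Fix.TUNE_HYPERPARAMETERS] * 3))
    print("2 data rounds:", project_cost([Fix.REVISIT_DATA] * 2))
    print("1 problem redefinition:", project_cost([Fix.REDEFINE_PROBLEM]))
```

With these placeholder costs, the first full pass is $26,500; three rounds of hyperparameter tuning add only $4,500, while two trips back to data collection add $43,000 and a single problem redefinition doubles the total.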

The true costs lie in two key stages:

- Problem Definition: Is the problem well-defined and achievable with current technology?

- Data Selection: Is the data appropriate and of sufficient quality?

These two factors are closely tied to business needs and significantly impact project outcomes. Companies that neglect them often spend heavily with little to show for it.
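Some data-quality questions can be answered mechanically before any GPU time is spent. The sketch below assumes a hypothetical question/answer schema and counts empty fields, duplicates after trimming and lowercasing, and suspiciously short answers; the 20-character threshold is an arbitrary assumption. It cannot tell you whether the data fits the problem, which still requires human review.

```python
from collections import Counter


def audit_examples(examples: list[dict], min_answer_chars: int = 20) -> dict[str, int]:
    """Count common quality problems in question/answer training examples.

    The 'question'/'answer' keys and the length threshold are assumptions.
    """
    # Normalize so that near-identical copies count as duplicates.
    normalized = [
        (ex.get("question", "").strip().lower(), ex.get("answer", "").strip().lower())
        for ex in examples
    ]
    counts = Counter(normalized)
    return {
        "total": len(examples),
        "empty_fields": sum(1 for q, a in normalized if not q or not a),
        "duplicates": sum(n - 1 for n in counts.values() if n > 1),
        "short_answers": sum(1 for _, a in normalized if 0 < len(a) < min_answer_chars),
    }


if __name__ == "__main__":
    sample = [
        {"question": "How do I reset my password?", "answer": "Use the 'Forgot password' link on the login page."},
        {"question": "how do I reset my password? ", "answer": "Use the 'Forgot password' link on the login page."},
        {"question": "Can I change my plan?", "answer": "Yes."},
        {"question": "", "answer": "Contact support."},
    ]
    print(audit_examples(sample))
```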

Why GPUs Are Not the Bottleneck

Contrary to common belief, GPU costs are a minor factor compared with the challenge of aligning technology with business objectives. The real difficulty lies in:

- Selecting the right problems to solve.

- Ensuring the data is tailored to those problems.

By focusing on these two areas, businesses can save substantial time and resources.

Bridging Technology and Business

The technological barriers to fine-tuning are not as daunting as they seem. The real challenge is integration:

- Aligning product goals with technological capabilities.

- Continuously adapting to an ever-evolving AI landscape.

Clarity on problem definitions and data quality can make or break a project. Companies must invest time and effort here to ensure meaningful results.